GPT Summary

1. GPT (Generative Pre-Training)

• goal: learn a universal representation that transfers to a wide range of tasks with little adaptation

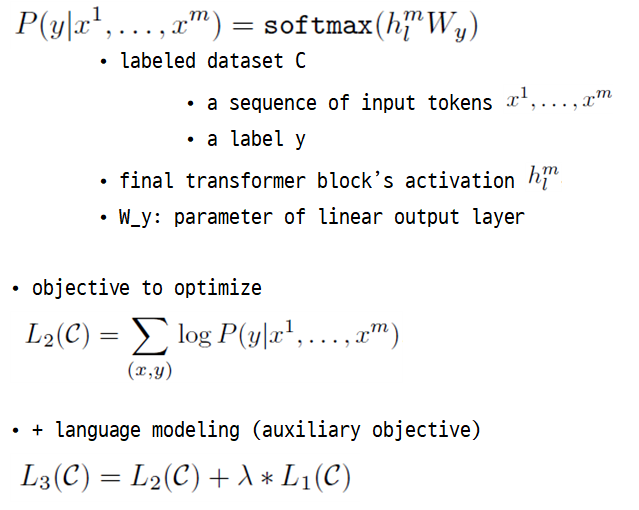

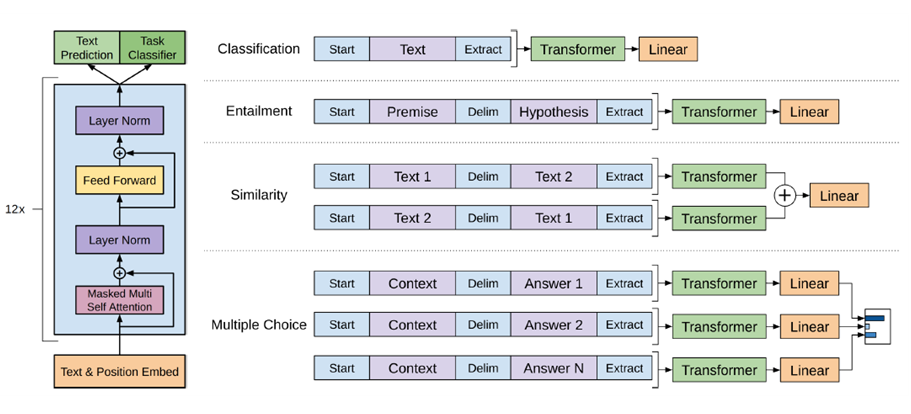

• generative pre-training (unlabeled text) + discriminative fine-tuning (labeled text)

1.1. Unsupervised pre-training
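For reference, the GPT paper defines pre-training as standard left-to-right language modeling over an unlabeled token corpus. In the paper's notation, $\mathcal{U} = \{u_1, \dots, u_n\}$ is the corpus, $k$ is the context window size, $\Theta$ are the model parameters, $W_e$ is the token embedding matrix, $W_p$ is the position embedding matrix, and $n$ in the second group of equations is the number of layers:

```latex
L_1(\mathcal{U}) = \sum_i \log P(u_i \mid u_{i-k}, \dots, u_{i-1}; \Theta)

h_0 = U W_e + W_p
h_l = \mathrm{transformer\_block}(h_{l-1}) \quad \forall l \in [1, n]
P(u) = \mathrm{softmax}(h_n W_e^{\top})
```

The model maximizes $L_1$, that is, the log-likelihood of each token given the $k$ tokens before it.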

1.2. Supervised fine-tuning
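For reference, the GPT paper fine-tunes on a labeled dataset $\mathcal{C}$, where each example is a token sequence $x^1, \dots, x^m$ with a label $y$. The final transformer block's activation at the last token, $h_l^m$, is fed to an added linear layer $W_y$. Here $L_1$ denotes the language modeling objective used in pre-training, kept as an auxiliary term with weight $\lambda$:

```latex
P(y \mid x^1, \dots, x^m) = \mathrm{softmax}(h_l^m W_y)

L_2(\mathcal{C}) = \sum_{(x, y)} \log P(y \mid x^1, \dots, x^m)

L_3(\mathcal{C}) = L_2(\mathcal{C}) + \lambda \cdot L_1(\mathcal{C})
```

The only new parameters introduced at this stage are $W_y$ and the embeddings for delimiter tokens.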

2. GPT-2

• difference from BERT

| | GPT-2 | BERT |
|---|---|---|
| Direction | uni-directional (auto-regressive; future tokens are masked) | bi-directional |
| Tokenizer | BPE (Byte-Pair Encoding) | WordPiece |
| Fine-tuning | No (zero-shot) | Yes |
| Transformer | Decoder | Encoder |
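To make the BPE entry in the comparison more concrete, here is a minimal sketch of how byte-pair encoding learns merge rules: it repeatedly merges the most frequent adjacent pair of symbols. This is a character-level toy; GPT-2's actual tokenizer works on bytes and differs in detail. The function names `learn_bpe_merges` and `apply_bpe`, the tie-breaking rule, and the sample words are assumptions made for illustration.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    merged = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe_merges(words, num_merges):
    """Learn up to `num_merges` merge rules from a list of words (toy version)."""
    # Each word starts as a tuple of single characters, weighted by its frequency.
    vocab = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Most frequent pair; ties are broken by the pair itself so the result is deterministic.
        best = max(pair_counts, key=lambda p: (pair_counts[p], p))
        merges.append(best)
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            new_vocab[merge_pair(symbols, best)] += freq
        vocab = new_vocab
    return merges


def apply_bpe(word, merges):
    """Tokenize one word by applying the learned merges in order."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


merges = learn_bpe_merges(["low", "low", "lowest"], num_merges=2)
print(merges)
print(apply_bpe("lower", merges))
```

Frequent character sequences end up as single tokens, while rare words fall back to smaller pieces, so no word is ever out of vocabulary.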

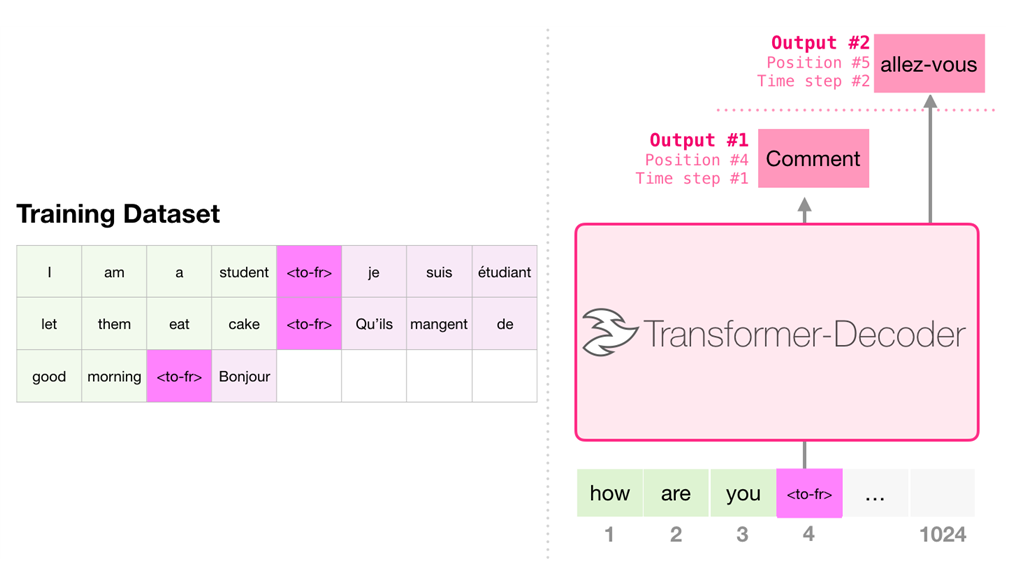
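The "mask future tokens" entry for GPT-2 can be sketched with a causal attention mask: position i may attend only to positions j <= i. The following is a pure-Python toy under that assumption, not the actual GPT-2 implementation; the function names `causal_mask` and `masked_softmax` are made up for illustration, and the attention scores are placeholder zeros.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when position i is allowed to attend to position j."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, mask):
    """Row-wise softmax in which masked-out positions receive weight 0."""
    weights = []
    for row, mask_row in zip(scores, mask):
        exps = [math.exp(s) if keep else 0.0 for s, keep in zip(row, mask_row)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


n = 3
scores = [[0.0] * n for _ in range(n)]  # placeholder attention scores
for row in masked_softmax(scores, causal_mask(n)):
    print(row)
```

With uniform scores, the first position puts all of its weight on itself, and each later position spreads its weight over itself and the positions before it. A bi-directional model such as BERT would correspond to a mask that is True everywhere.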

* auto-regression: after each token is produced, it is appended to the input sequence, and the extended sequence becomes the model's input at the next step

* Tokenizer comparison: https://lovit.github.io/nlp/2018/04/02/wpm/

* zero-shot: the model is not trained on any task-specific data; it is only evaluated on these tasks as a final test
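The auto-regression note above can be sketched as a short loop. This is a toy under stated assumptions: `generate_greedy`, `toy_next_token_scores`, and the `BIGRAM_SCORES` table are hypothetical stand-ins for a real model, and the toy scorer looks only at the last token, whereas GPT-2 conditions on the whole sequence.

```python
# Hypothetical next-token scores, keyed by the previous token.
BIGRAM_SCORES = {
    "are": {"you": 0.9, "there": 0.1},
    "you": {"there": 0.8, "are": 0.2},
    "there": {"<eos>": 1.0},
}


def toy_next_token_scores(tokens):
    """Stand-in for a language model: score candidates from the last token only."""
    return BIGRAM_SCORES[tokens[-1]]


def generate_greedy(next_token_scores, prompt, max_new_tokens, eos_token="<eos>"):
    """Repeatedly pick the best next token and feed the extended sequence back in."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)        # the model sees everything generated so far
        next_token = max(scores, key=scores.get)  # greedy choice
        tokens.append(next_token)                 # the output becomes part of the next input
        if next_token == eos_token:
            break
    return tokens


print(generate_greedy(toy_next_token_scores, ["are"], max_new_tokens=5))
```

The key point is the `tokens.append(next_token)` line: each step's output is part of the next step's input.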

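• translation without an encoder: GPT-2 is decoder-only, so the source sentence is given as part of the prompt and the translation is generated as its continuation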
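The GPT-2 paper describes conditioning the model on example pairs in the format `english sentence = french sentence`, ending the prompt with `english sentence =`, and taking the first generated sentence as the translation. Below is a minimal sketch of that prompt format only. The helper names `build_translation_prompt` and `extract_translation`, the newline separator between pairs, and the sample sentences are assumptions made for illustration; they do not come from the paper or from any library.

```python
def build_translation_prompt(example_pairs, source_sentence):
    """Format example pairs as 'english = french' lines, then leave the last one open."""
    lines = [f"{english} = {french}" for english, french in example_pairs]
    lines.append(f"{source_sentence} =")
    return "\n".join(lines)


def extract_translation(prompt, generated_text):
    """Keep only the first line the model produced after the prompt."""
    continuation = generated_text[len(prompt):]
    return continuation.strip().split("\n")[0].strip()


prompt = build_translation_prompt([("good morning", "bonjour")], "thank you")
print(prompt)

# Hypothetical model output: the prompt followed by a continuation.
fake_output = prompt + " merci\nhello = salut"
print(extract_translation(prompt, fake_output))
```

No weights are updated here. The task is specified entirely through the text the decoder is asked to continue.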

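    # torch is the backend required by return_tensors='pt'
    import torch
    from transformers import GPT2Tokenizer, GPT2LMHeadModel

    # Load the pretrained GPT-2 tokenizer and the model with a language-modeling head
    tokenizer = GPT2Tokenizer.from_pretrained('gpt2')
    model = GPT2LMHeadModel.from_pretrained('gpt2')

    # Encode the prompt as a tensor of token ids
    input_ids = tokenizer.encode("Are you there?", return_tensors='pt')

    # Greedy decoding: pick the most likely next token at each step,
    # until the sequence (prompt included) reaches 50 tokens
    greedy_output = model.generate(input_ids, max_length=50)

    print(tokenizer.decode(greedy_output[0], skip_special_tokens=True))

    """
    [OUTPUT]
    Are you there?
    I'm here to help you.
    """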

References

• GPT original paper: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf

• GPT-2 original paper

• The Illustrated GPT-2 (Visualizing Transformer Language Models): http://jalammar.github.io/illustrated-gpt2/

• GPT-2 OpenAI blog: https://openai.com/blog/better-language-models/

• Text generation code: https://huggingface.co/blog/how-to-generate

• GPT-3 original paper: https://arxiv.org/abs/2005.14165

• GPT-3 blog post

• GPT-3 paper explained (YouTube): https://www.youtube.com/watch?v=p24JUVgDkQk, https://www.youtube.com/watch?v=SY5PvZrJhLE