[DL Wizard] Derivative, Gradient and Jacobian: Translation and Summary

2020. 2. 5. 16:53ㆍnlp


Source: Derivative, Gradient and Jacobian - Deep Learning Wizard (www.deeplearningwizard.com)

This is the simplified equation we have been using to update our parameters toward good values (good local or global minima):

$$\theta = \theta - \eta \cdot \nabla_\theta$$

where $\theta$ denotes the parameters, $\eta$ the learning rate, and $\nabla_\theta$ the parameters' gradients.

parameters = parameters - learning_rate * parameters_gradients

→ This process can be split into two steps:

1) Backpropagation: compute the gradients

2) Gradient descent: use the gradients to update the parameters
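The two steps above can be sketched in plain Python on a toy one-parameter problem. This is a minimal illustration, assuming a hypothetical loss $L(\theta) = (\theta - 3)^2$ whose gradient $2(\theta - 3)$ is written out by hand as a stand-in for backpropagation; in practice an autograd library such as PyTorch computes the gradient for you. The function names `loss`, `gradient`, `gradient_descent` and the values `learning_rate=0.1`, `steps=100` are hypothetical choices for illustration.

```python
def loss(theta):
    # Hypothetical toy loss with its minimum at theta = 3
    return (theta - 3.0) ** 2


def gradient(theta):
    # Step 1 (hand-written stand-in for backpropagation): dL/dtheta = 2 * (theta - 3)
    return 2.0 * (theta - 3.0)


def gradient_descent(theta, learning_rate=0.1, steps=100):
    for _ in range(steps):
        parameters_gradients = gradient(theta)                # step 1: compute the gradient
        theta = theta - learning_rate * parameters_gradients  # step 2: update the parameter
    return theta


theta_final = gradient_descent(theta=0.0)
print(round(theta_final, 4))  # close to the minimum at 3
```

Each update multiplies the distance to the minimum by $(1 - 2\eta) = 0.8$ here, so a learning rate that is too large (above 1 for this loss) would make the iterates diverge instead.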

Gradient, Jacobian, Generalized Jacobian

- Gradient : (input) vector → (output) scalar

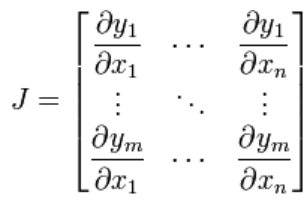

- Jacobian : (input) vector → (output) vector

- Generalized Jacobian : (input) tensor → (output) tensor
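To make the shapes concrete, here is a minimal numerical sketch in plain Python. It assumes central finite differences (not backpropagation) as the way to approximate derivatives, and the helpers `numerical_gradient` and `numerical_jacobian` and the test functions `f_scalar` and `g_vector` are hypothetical. For $f: \mathbb{R}^n \to \mathbb{R}$ the gradient is the length-$n$ vector of partial derivatives $\partial f / \partial x_j$; for $g: \mathbb{R}^n \to \mathbb{R}^m$ the Jacobian is the $m \times n$ matrix with entries $J_{ij} = \partial g_i / \partial x_j$.

```python
import math


def numerical_gradient(f, x, h=1e-6):
    """Central-difference gradient of a scalar-valued f at the point x (a list of floats)."""
    grad = []
    for j in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[j] += h
        x_minus[j] -= h
        grad.append((f(x_plus) - f(x_minus)) / (2 * h))
    return grad  # length n, same shape as x


def numerical_jacobian(f, x, h=1e-6):
    """Central-difference Jacobian of a vector-valued f at x: J[i][j] ~ d f_i / d x_j."""
    n = len(x)
    m = len(f(x))
    jac = [[0.0] * n for _ in range(m)]
    for j in range(n):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[j] += h
        x_minus[j] -= h
        f_plus, f_minus = f(x_plus), f(x_minus)
        for i in range(m):
            jac[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return jac  # m rows (outputs) x n columns (inputs)


def f_scalar(x):
    # Vector -> scalar: f(x) = x0^2 + 3*x1, exact gradient [2*x0, 3]
    return x[0] ** 2 + 3 * x[1]


def g_vector(x):
    # Vector -> vector (R^2 -> R^3): g(x) = [x0*x1, x0 + x1, sin(x0)]
    return [x[0] * x[1], x[0] + x[1], math.sin(x[0])]


x = [1.0, 2.0]
grad = numerical_gradient(f_scalar, x)  # approximately [2.0, 3.0]
jac = numerical_jacobian(g_vector, x)   # approximately [[2.0, 1.0], [1.0, 1.0], [cos(1), 0.0]]
print(grad)
print(jac)
```

The same idea extends to the generalized Jacobian: under one common convention, if the input tensor $x$ has shape $(a, b)$ and the output tensor $y$ has shape $(c, d)$, the generalized Jacobian has shape $(c, d, a, b)$, with entries $\partial y_{ij} / \partial x_{kl}$.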
